Published

OCR (Optical Character Recognition) is the process of taking an image of text and making it “machine readable” or “live.” The software views (or reads) the image (Optical), scans for text (Characters), and then recognizes the relevant characters and outputs them as live text (Recognition). This process has been around for a few decades, but it still is very much a work in progress.

While the quality of current OCR software (and the concept of OCR itself, if we are being honest here) is nothing short of amazing, it is still an imperfect last resort for publishers. However, it does not have to be the nightmare that many of us imagine it to be.

How OCR Works

In its current form, OCR is achieved by running sophisticated computer software that utilizes matrix matching (or “pattern matching”) and feature extracting (or “feature detection”) on an image or a series of images in order to determine which pieces of the image should become live text and which characters those pieces should become. The software first scans the image and compares the patterns that it finds to a series of stored glyphs, looking for matches as it goes. This is the pattern-matching portion, and it focuses on characters in their entirety.

The main problem with pattern matching based on entire characters is the font used. Since different fonts render characters differently and may vary considerably from the stored glyphs, pattern matching is prone to mistakes. This is what led to the development of feature detection.

The feature-detection phase is the more advanced form of character recognition. To achieve better accuracy, feature detection focuses on the pieces of the characters (its “features”) rather than the character as a whole. For example, it does not consider a capital letter M to be a single character. Instead, it considers M to be four individual lines that have three meeting points (top left, top right, middle bottom). Most modern OCR programs rely more heavily on feature detection than pattern matching, which allows them to be omnifont and achieve a greater level of character accuracy. Still, the variation in fonts, letter spacing, ink saturation, and other factors cause a number of problems.

OCR Issues and Shortcomings

The OCR process results in numerous problems, including lost text and incorrect words. The following are some of the variables that affect the ability of a computer to utilize the contrast, letter spacing, and letter shape to recognize characters and connect them to words:

- The condition of the original source

- The quality or color of the paper

- The scanner used to create the images

- The quality and resolution of the image being scanned

- The language, font size, and line spacing of the text

- The use of ligatures

- The OCR software (different packages are better for different types of text)

In short, you are not going to be able to put the scans of your 1820 copy of The Monastery through your OCR software and expect the output to be immediately publishable. Even with the best software available, there are going to be word and character issues throughout the book.

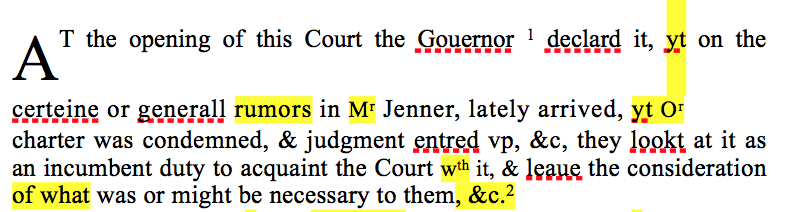

You may even see output that looks like this:

Some materials are not good candidates for OCR, which is common for titles printed earlier than the mid-1800s (ironically, since those are the titles most likely to need OCR). Most titles, though, can go through the process more successfully, but the initial output rarely hits the level of accuracy that publishers expect.

However, there are ways to improve the initial output to achieve higher specification. The key is to have a detailed and organized plan (and clear level of expectation) for every stage of the OCR process. Before even considering OCR, you need to know what you have and its quality (our list is a good place to start when creating your checklist), what you expect to receive after OCR, and what your plan is for evaluating those results and correcting the errors in the output (more on this in the OCR Standards and OCR Verification sections).

Reading and Interpreting the Output

Whether you plan to use OCR software in-house or send the work out to a vendor, it is important to know what you are going to receive. You are likely to find OCR software that boasts an accuracy rating anywhere from 95 percent to 99 percent—but those numbers can be deceiving!

Those percentages are based on high-quality scans of titles that are not complex, not on low-quality scans of highly complex or ancient texts. Also, those numbers are based on character accuracy, not word accuracy. You may receive a file back that claims to be 97 percent accurate, but less than 80 percent of the words may be spelled correctly. While character counts and word counts have a correlative relationship, they are certainly not interchangeable numbers; the word count will always be significantly lower than the character count, meaning the word accuracy will always be lower than the character accuracy. Eighty percent may be OK if we were government agents intercepting enemy intel and only needed to extract the important information or if we were merely using OCR to streamline some basic data entry, but a book only 80 percent accurate in the publishing industry means both time and money—two things we do not generally have in excess.

Making the output even more difficult to check for correctness are “legal word errors.” Legal word errors are words that do not match the input (the source material) but are not misspelled and therefore will not be found by a spell check. Examples of legal word errors are ease instead of case, churl instead of church, or (our favorite) Cod instead of God.

Another common error occurs due to what we call “character elision.” Character elision is when letter pairs and individual letters are confused by the software. The most frequent examples are cl and d or rn and m. These types of errors occur any time pairs of letters are shaped similarly to other letters (vv for w, ol for d, li for h, nn for m). The words that result from character elision never match the source text but are valid words. Typical errors include modern for modem, arm for own, and dove for clove.

Since a standard spell check will not find these errors, they have to be discovered in different ways, which is why it is important to have defined OCR standards and a clear and meticulous OCR verification process.

OCR Standards

Two essential elements to the character recognition process are scanning and recognition—the quality of the scan and the accuracy of the recognition. Without high-quality scans at an acceptable resolution (we recommend 300 DPI), an acceptable output is less likely.

We recently made our OCR Standards page public so that even nonsubscribers can see what we consider to be acceptable output. We adhere to these standards internally when working on any project that includes OCR, and these are our official expectations for any OCR output.

As you can see on that page, we expect the OCR to do the following:

- Output at 99.995 percent accuracy (which could mean an average of one error every two printed pages—though at Scribe, we expect fewer mistakes)

- Preserve character styles

- Use Unicode characters throughout (any combining Unicode should be resolved to a single character)

- Retain all punctuation, including the ability to differentiate between hyphens and dashes

- Maintain paragraph integrity without using soft returns

- Resolve line-breaking hyphens

- Capture all images and place callouts in the correct location

OCR Verification

The third essential element to go along with scanning and recognition is verification. The verification process is the most critical step in the entire OCR procedure, as it determines whether or not everything leading up to this point was a success and aims to ensure that the output is 100 percent correct (or as close as is realistically possible based on the input). While the OCR standards establish the expectations, our verifications procedures at Scribe confirm the accuracy. The intention of OCR verification, like the goal of quality control, is to confirm that the output matches both our established standards and the source.

Since our published standards require 99.9 percent accuracy, any file found to be below that benchmark will be rejected and cannot continue through the verification process. If a file meets that accuracy requirement, then the OCR verification will include both confirming the accuracy and correcting any errors that are found.

In order for a file to pass through our verification process, we have a few different steps to confirm that the file meets the benchmark. For starters, we have to confirm that all the text is present and in the correct order. Then we check for paragraph integrity and character style issues. From there, we run a full spell check before exporting all the content into a text editor and running a series of searches we have developed to check for commonly seen OCR errors.

Every file has to pass through each stage before we can perform the full OCR verification and correction process to confirm that there are no longer any known errors in the file. Like everything else OCR related, the verification procedure is not quick and painless. No one procedure will unveil all the errors in the file—as mentioned when discussing legal word errors—so the full verification process involves a variety of methods for confirming accuracy and finding errors. Even a file that has virtually no errors will take a few hours to verify, but it is time well spent—your output will have fewer errors and will be closer to being publishable.

Our full and official OCR verification process is only available to subscribers of our Well-Formed Document Workflow, but these are the basics of what we expect for any OCR project. In order to get the most out of the OCR conversion and to avoid the issues that can make such projects a disaster, it is important to establish higher standards at the very beginning.